The Role of Edge Computing in Digital Twin Data Processing

Decentralized Approach to Data Processing

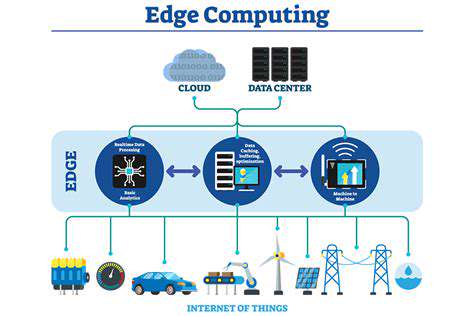

Edge computing represents a significant shift from traditional cloud-based architectures. Instead of sending all data to a central server, it moves processing closer to where the data is generated. Because data no longer has to make a round trip to a distant data center, systems can respond to events and requests faster. This matters most in time-sensitive applications such as industrial automation, autonomous vehicles, and real-time video streaming. Local processing also reduces the load on central network infrastructure.

The distributed nature of edge computing can also improve resilience and fault tolerance. Because processing happens at multiple points, the failure of one edge node need not bring down the whole system, and nodes can keep operating locally during network disruptions or outages. Decentralization can also reduce the exposure of sensitive data, since that data does not need to travel across potentially vulnerable networks to a central location. This is valuable in applications where data privacy and confidentiality are paramount, provided the edge devices themselves are properly secured.
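The failover idea can be sketched in a few lines. This is a minimal illustration, not a production pattern: the function `process_with_failover`, the node names, and the two handler functions are all hypothetical, and a failed node is simulated by raising `ConnectionError`.

```python
def process_with_failover(reading, nodes):
    """Try each edge node in order and return the first successful result."""
    errors = []
    for name, handler in nodes:
        try:
            return name, handler(reading)
        except ConnectionError as exc:
            errors.append(f"{name}: {exc}")  # remember why this node was skipped
    raise RuntimeError("all edge nodes failed: " + "; ".join(errors))


def offline_node(reading):
    # Hypothetical node that is currently unreachable.
    raise ConnectionError("node unreachable")


def healthy_node(reading):
    # Hypothetical node that converts Celsius to Fahrenheit.
    return reading * 9 / 5 + 32


nodes = [("edge-a", offline_node), ("edge-b", healthy_node)]
served_by, result = process_with_failover(100.0, nodes)
print(served_by, result)
```

Here the first node fails and the second one serves the request, so the caller never sees the outage. A real deployment would likely add health checks and timeouts rather than relying on exceptions alone.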

Key Benefits and Applications

One of the most significant benefits of edge computing is reduced latency. Because processing occurs closer to the source, less time passes between receiving data and acting on it. This is essential for applications that require real-time responses, such as autonomous vehicles that must react to changing conditions within a fraction of a second. The same holds in industrial settings, where control systems often must respond to events within milliseconds to maintain safety and efficiency.

Another key advantage is improved bandwidth efficiency. By processing data locally, edge computing reduces the amount of data that needs to be transmitted across the network. This frees up bandwidth for other crucial tasks and reduces the overall cost of network infrastructure. This efficiency is especially valuable in remote locations or areas with limited network connectivity.
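As a rough illustration of how local processing cuts the volume of transmitted data, the sketch below collapses a window of raw sensor readings into a single summary record before upload. The function name `summarize_window`, the field names, and the sample values are assumptions made for this example, not part of any particular platform.

```python
from statistics import mean


def summarize_window(readings):
    """Collapse a window of raw readings into one compact summary record."""
    if not readings:
        raise ValueError("window must contain at least one reading")
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
    }


# Hypothetical example: eight raw temperature samples become one record.
raw = [20.0, 20.5, 21.0, 20.5, 20.0, 21.5, 22.0, 20.5]
summary = summarize_window(raw)
print(summary)
```

Only the summary needs to cross the network, so the upload shrinks from eight values to four regardless of how the window is tuned. Whether a summary is sufficient depends on what the digital twin needs; some models require the raw signal.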

Edge computing applies across many industries, including industrial automation, smart cities, healthcare, and financial services. In each case, the value comes from collecting and processing data quickly, close to where it is produced. The gains in efficiency and responsiveness are most visible in settings such as smart factories and autonomous vehicles.

Real-Time Analysis and Decision-Making with Edge Computing

Real-time Data Acquisition

Real-time analysis hinges on the ability to quickly and reliably gather data from various sources. This encompasses everything from sensor readings to market fluctuations, social media trends, and more. Accurate and timely data ingestion is critical for effective analysis and subsequent decision-making. Efficient data pipelines are essential to ensure that the flow of information is uninterrupted and that data is prepared for processing in a timely manner.

Data acquisition systems need to be robust and scalable to handle the volume and velocity of modern data streams. This often requires specialized hardware and software solutions tailored to specific data sources and formats. Reliable data capture is the cornerstone of any successful real-time analysis initiative.

Data Preprocessing and Transformation

Raw data is rarely in a format suitable for immediate analysis. Preprocessing steps are crucial to cleanse, transform, and prepare the data for analysis algorithms. This might involve handling missing values, correcting errors, normalizing data, and converting formats to ensure consistency and accuracy. Data quality is paramount for reliable insights and informed decisions.

Data transformation involves converting the data into a suitable format for specific analysis techniques. This could involve aggregating data, creating derived variables, or applying mathematical operations. Effective transformations are essential to extract meaningful information from the data and to prepare it for further analysis.
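Two of the preprocessing steps mentioned above, handling missing values and normalizing data, might look like the following sketch. It uses forward filling and min-max scaling as example techniques; the function names and the sample series are assumptions for illustration, and other strategies (interpolation, z-score scaling) may suit a given data source better.

```python
def forward_fill(values):
    """Replace None entries with the most recent valid value."""
    filled, last = [], None
    for v in values:
        if v is not None:
            last = v
        if last is None:
            raise ValueError("series starts with a missing value")
        filled.append(last)
    return filled


def min_max_normalize(values):
    """Scale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant series: avoid division by zero
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical sensor series with two dropped samples.
raw = [10.0, None, 14.0, 12.0, None, 20.0]
clean = forward_fill(raw)
scaled = min_max_normalize(clean)
print(clean)
print(scaled)
```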

Algorithm Selection and Implementation

Choosing the right algorithm is critical for deriving meaningful insights from the real-time data. The selection process should consider the nature of the data, the desired outcome, and the computational resources available. Different algorithms excel at different types of analysis, such as forecasting, classification, and pattern recognition.

Implementing the chosen algorithms requires careful consideration of performance and scalability. Efficient coding practices and optimized libraries are essential for processing large volumes of data in real-time. The efficiency of the algorithm implementation directly impacts the speed of decision-making.

Real-time Monitoring and Feedback

Real-time analysis isn't a one-time process; it requires continuous monitoring and feedback mechanisms. Systems need to be able to track the performance of the analysis process and provide alerts or notifications when anomalies or deviations are detected. This allows for immediate adjustments and responses to changing conditions.

Real-time feedback loops are crucial for identifying and addressing potential issues quickly. This iterative approach ensures that the analysis process remains effective and relevant in dynamic environments. Adaptive systems are essential for maintaining accuracy and relevance in real-time analysis.
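One simple way to implement such a monitoring loop is a rolling z-score check, sketched below. This is only one possible technique, chosen for brevity; the class name `RollingAnomalyDetector`, the window size, the minimum baseline of five samples, and the threshold of three standard deviations are all assumptions that would need tuning for real data.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flag values that deviate strongly from a rolling window of recent data."""

    def __init__(self, window=20, threshold=3.0):
        self.history = deque(maxlen=window)
        self.threshold = threshold

    def observe(self, value):
        """Return True if value is an outlier relative to the recent window."""
        is_anomaly = False
        if len(self.history) >= 5:  # wait for a minimal baseline
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        if not is_anomaly:
            self.history.append(value)  # keep outliers out of the baseline
        return is_anomaly


detector = RollingAnomalyDetector(window=10, threshold=3.0)
stream = [50.0, 50.5, 49.5, 50.2, 49.8, 50.1, 75.0, 50.3]
flags = [detector.observe(x) for x in stream]
print(flags)
```

Excluding flagged values from the baseline keeps a single spike from widening the window's spread and masking later anomalies. The trade-off is that a genuine, lasting shift in the signal will keep being flagged until the detector is reset.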

Decision Support and Visualization

The results of real-time analysis should be presented in a clear and concise manner, ideally through interactive visualizations. Visualizations allow stakeholders to quickly grasp the key insights and trends derived from the data. This helps in making informed decisions in a timely manner.

Decision support systems should provide clear recommendations based on the analysis. These recommendations should be presented in a way that is easily understandable and actionable. Effective visualization and clear decision support are key to leveraging real-time analysis for effective action.

Scalability and Maintainability

Real-time analysis systems must be designed with scalability in mind. They need to be able to handle increasing data volumes and processing demands without compromising performance. A scalable architecture is essential to accommodate future growth and changing requirements.

Maintaining the system over time is crucial. Clear documentation, well-defined processes, and robust testing procedures are necessary to ensure long-term viability and effectiveness. Regular updates and maintenance are essential to keep the system running smoothly and to adapt to changes in the data sources and analysis requirements.

Security and Privacy Considerations

Real-time analysis often involves sensitive data, requiring robust security measures to protect against unauthorized access and breaches. Data encryption, access controls, and regular security audits are crucial to maintaining data integrity and confidentiality. Protecting sensitive data is essential for maintaining trust and compliance.

Privacy regulations and policies must be carefully considered when handling real-time data. Data anonymization and compliance with privacy laws are critical to ensure ethical and responsible use of data. Adhering to privacy standards is vital for maintaining public trust and avoiding legal ramifications.

Security and Privacy Considerations in Edge Computing for Digital Twins

Data Security at the Edge

Protecting data at the edge is paramount for digital twins, as sensitive information often resides and is processed at the point of collection. This calls for robust encryption throughout the data lifecycle, from initial capture to storage and transmission. Secure communication protocols such as TLS (the successor to the now-deprecated SSL) help prevent eavesdropping and unauthorized access while data moves between edge devices and the cloud. In addition, access controls and authentication mechanisms should restrict access to sensitive data based on user roles and permissions, so that only authorized personnel can view and manipulate critical information.
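On the device side, a TLS client configuration could be sketched with Python's standard `ssl` module as shown below. The helper name `make_client_tls_context` and the choice of TLS 1.2 as the minimum version are assumptions for this example; the appropriate minimum depends on what the devices and the cloud endpoint support.

```python
import ssl


def make_client_tls_context(ca_file=None):
    """Build a client-side TLS context that verifies the server certificate."""
    # create_default_context enables certificate and hostname verification.
    context = ssl.create_default_context(cafile=ca_file)
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse older protocols
    return context


context = make_client_tls_context()
print(context.verify_mode, context.check_hostname)
```

The resulting context would then be passed to whatever opens the connection, for example `context.wrap_socket(sock, server_hostname=...)` with the real endpoint name supplied by the deployment.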

Data integrity is equally important. Mechanisms for verifying data authenticity and detecting tampering or corruption during transit or storage are vital. Cryptographic hash functions can produce compact fingerprints of data so that alterations are detected quickly. To guard against deliberate tampering rather than only accidental corruption, the fingerprint itself should be protected, for example with a keyed hash (HMAC) or a digital signature. Regular audits and security assessments are also necessary to identify vulnerabilities and ensure ongoing compliance with security regulations.
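A minimal sketch of fingerprinting and tamper detection, using Python's standard `hashlib` and `hmac` modules, follows. The plain SHA-256 digest detects accidental corruption; the keyed HMAC variant additionally resists an attacker who can modify the data but does not hold the key. The function names, the demo key, and the sample payload are hypothetical.

```python
import hashlib
import hmac


def fingerprint(payload: bytes) -> str:
    """Return the SHA-256 digest of the payload as a hex string."""
    return hashlib.sha256(payload).hexdigest()


def sign(payload: bytes, key: bytes) -> str:
    """Return an HMAC-SHA256 tag binding the payload to a shared key."""
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify(payload: bytes, key: bytes, tag: str) -> bool:
    """Check the tag using a constant-time comparison."""
    return hmac.compare_digest(sign(payload, key), tag)


key = b"demo-key-not-for-production"  # assumption: key is provisioned securely
message = b'{"sensor": "pump-7", "rpm": 1480}'
tampered = b'{"sensor": "pump-7", "rpm": 9999}'
tag = sign(message, key)

print(verify(message, key, tag))
print(verify(tampered, key, tag))
```

In practice the key would come from secure storage on the device rather than from source code.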

Privacy Preserving Data Collection

Digital twins often collect large amounts of data, including potentially sensitive personal information. Adherence to privacy regulations, such as GDPR and CCPA, is essential. Data minimization principles should be applied, collecting only the data necessary for the digital twin's function. Anonymization and pseudonymization techniques can be used to protect individual identities while maintaining the value of the data for analysis.
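Data minimization and pseudonymization could be combined at the edge along the lines of the sketch below. It keeps only an allow-list of fields and replaces the direct identifier with a keyed hash. All names, fields, and the secret are hypothetical, and truncating the digest to 16 hex characters is an arbitrary choice for readability. Note that pseudonymized data can still count as personal data under regulations such as GDPR, because whoever holds the key can re-link it.

```python
import hashlib
import hmac


def minimize(record: dict, allowed_fields: set) -> dict:
    """Keep only the fields the digital twin actually needs."""
    return {k: v for k, v in record.items() if k in allowed_fields}


def pseudonymize(identifier: str, secret: bytes) -> str:
    """Map an identifier to a stable pseudonym using a keyed hash."""
    digest = hmac.new(secret, identifier.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


secret = b"demo-secret-not-for-production"  # assumption: stored securely
record = {
    "operator_id": "alice@example.com",
    "machine": "press-3",
    "temp_c": 71.2,
    "shift_notes": "free text that the twin does not need",
}

safe = minimize(record, {"operator_id", "machine", "temp_c"})
safe["operator_id"] = pseudonymize(safe["operator_id"], secret)
print(safe)
```

Because the pseudonym is deterministic for a given secret, records from the same operator can still be correlated for analysis without exposing the original identifier.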

Transparency about data collection practices is crucial. Users should be informed about what data is being collected, how it is used, and with whom it is shared. Clear and concise privacy policies, readily available to users, are essential to build trust and demonstrate a commitment to responsible data handling.

Secure Communication Channels

Reliable and secure communication channels are critical for transmitting data between edge devices and the cloud. This includes implementing robust authentication mechanisms to verify the identity of communicating parties. Using encryption protocols to protect data during transit is essential to prevent unauthorized access and data breaches. Ensuring the integrity of the data during transmission is also important, using methods to detect any unauthorized modification or tampering.

Physical Security of Edge Devices

The physical security of edge devices is often overlooked but is just as crucial as the security of the data itself. Protecting edge devices from physical theft, damage, or unauthorized access is paramount. Implementing robust physical security measures, such as secure enclosures, access controls, and regular security audits, is essential to safeguarding the integrity of the data and the operational functionality of the digital twin. Consideration should also be given to environmental factors that could impact the reliability of the devices, such as extreme temperatures or power outages, as these can compromise data integrity and system availability.

Scalability and Adaptability of Security Measures

As digital twins evolve and expand, security measures must adapt and scale with them. Security solutions should be designed to accommodate growing data volumes and system complexity, and security protocols should be adjustable as threats and vulnerabilities change. Regular security updates and patches address emerging vulnerabilities and keep the digital twin infrastructure protected. Automated security monitoring and response systems help detect and mitigate threats in real time.
