Report Automation: Supply Chain Insights with Generative AI

Data Sources and Integration

Automating data aggregation involves identifying and connecting various data sources, from databases and spreadsheets to APIs and cloud-based applications. A crucial aspect of this process is ensuring data consistency and compatibility across different systems. This often requires transforming data into a standardized format, handling potential inconsistencies in data types, and resolving discrepancies in naming conventions. Effective data integration is the foundation upon which reliable and accurate reporting can be built.

Properly mapping fields between different data sources is essential for accurate aggregation. This involves understanding the relationships between data elements in each source and establishing clear mappings to ensure that the correct data points are combined. Furthermore, robust error handling mechanisms are needed to manage missing or invalid data, preventing errors from propagating through the aggregation process and affecting reporting accuracy.
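As a minimal sketch of field mapping with error handling, the Python below assumes two hypothetical sources (an ERP export and a warehouse API) that name the same fields differently. Each record is renamed to one canonical schema and type-coerced. Records with missing or invalid values go to a reject list instead of reaching the aggregate. The source names, field names, and the `normalize`/`integrate` helpers are illustrative assumptions, not part of any specific product.

```python
from typing import Any

# Hypothetical source schemas: each maps a source-specific field name
# to the canonical name used downstream (illustrative assumption).
FIELD_MAPS: dict[str, dict[str, str]] = {
    "erp": {"sku_code": "sku", "qty_on_hand": "quantity", "wh": "warehouse"},
    "wms_api": {"itemId": "sku", "stockLevel": "quantity", "location": "warehouse"},
}
REQUIRED_FIELDS = ("sku", "quantity", "warehouse")


def normalize(source: str, record: dict[str, Any]) -> dict[str, Any]:
    """Rename fields to the canonical schema and coerce types.

    Raises ValueError on missing or invalid values so the caller can
    quarantine the record rather than let it skew the aggregate.
    """
    mapping = FIELD_MAPS[source]
    row = {canonical: record.get(src) for src, canonical in mapping.items()}
    missing = [f for f in REQUIRED_FIELDS if row.get(f) in (None, "")]
    if missing:
        raise ValueError(f"{source}: missing {missing}")
    try:
        row["quantity"] = int(row["quantity"])
    except (TypeError, ValueError):
        raise ValueError(f"{source}: invalid quantity {row['quantity']!r}") from None
    # Resolve naming-convention drift in identifiers (e.g. "a-100" vs "A-100").
    row["sku"] = str(row["sku"]).strip().upper()
    return row


def integrate(batches: dict[str, list[dict[str, Any]]]):
    """Normalize every record; keep rejects (with reasons) for review."""
    clean, rejected = [], []
    for source, records in batches.items():
        for rec in records:
            try:
                clean.append(normalize(source, rec))
            except ValueError as err:
                rejected.append((source, rec, str(err)))
    return clean, rejected


# Example: one record has a non-numeric stock level and is rejected.
batches = {
    "erp": [{"sku_code": "a-100", "qty_on_hand": "40", "wh": "TOKYO"}],
    "wms_api": [
        {"itemId": "A-100", "stockLevel": 15, "location": "OSAKA"},
        {"itemId": "B-200", "stockLevel": "n/a", "location": "OSAKA"},
    ],
}
clean, rejected = integrate(batches)
```

Keeping rejects with their reasons, rather than silently dropping them, stops bad records from propagating while leaving a trail for someone to fix them at the source.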

Data Cleaning and Preprocessing

Raw data often contains errors, inconsistencies, and missing values. A critical step in automating data aggregation is therefore implementing data cleaning and preprocessing procedures: identifying and correcting errors, handling missing values, and transforming data into a format suitable for analysis. This step is crucial for ensuring the accuracy and reliability of the aggregated data.

Techniques like data validation and imputation are frequently employed. Data validation checks records against predefined rules and standards, flagging inconsistencies so they can be corrected or excluded. Imputation fills in missing data points, either with statistical techniques or by drawing on expert knowledge to estimate plausible values. Thorough data cleaning minimizes the risk of inaccurate reporting and improves the overall quality of the analysis.
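A minimal sketch of rule-based validation followed by median imputation, assuming hypothetical shipment fields `lead_time_days` and `unit_cost` and made-up rule bounds. Missing values deliberately pass validation so that imputation, not rejection, handles them. Median imputation is just one of the statistical techniques the paragraph alludes to.

```python
from statistics import median

# Hypothetical rules: a missing value (None) is allowed here and imputed later.
RULES = {
    "lead_time_days": lambda v: v is None or 0 <= v <= 365,
    "unit_cost": lambda v: v is None or v > 0,
}


def validate(rows):
    """Split rows into passing rows and (row, failed_fields) pairs."""
    valid, invalid = [], []
    for row in rows:
        failed = [field for field, rule in RULES.items() if not rule(row.get(field))]
        if failed:
            invalid.append((row, failed))
        else:
            valid.append(row)
    return valid, invalid


def impute_median(rows, field):
    """Return new rows with missing `field` values set to the observed median."""
    observed = [r[field] for r in rows if r.get(field) is not None]
    if not observed:
        return list(rows)  # nothing to estimate from; leave gaps for review
    fill = median(observed)
    return [{**r, field: fill} if r.get(field) is None else r for r in rows]


rows = [
    {"sku": "A-100", "lead_time_days": 12, "unit_cost": 3.5},
    {"sku": "A-101", "lead_time_days": None, "unit_cost": 4.0},
    {"sku": "A-102", "lead_time_days": 20, "unit_cost": 2.0},
    {"sku": "A-103", "lead_time_days": -5, "unit_cost": 1.0},  # fails validation
]
valid, invalid = validate(rows)
cleaned = impute_median(valid, "lead_time_days")
```

Validating before imputing matters: an impossible value such as a negative lead time would otherwise distort the median used to fill the gaps.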

Automated Aggregation Logic

Defining the specific logic for aggregating data is a key component of automation. This means specifying the calculations and transformations needed to combine data from various sources into meaningful summaries: the metrics to report, the aggregations that produce them (such as sums, averages, or counts), and the filtering criteria that select the relevant subset of data for each reporting need. Well-defined aggregation logic ensures that reports consistently reflect the desired information.
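The sketch below expresses aggregation logic as a filter plus a group-by that computes count, sum, and mean. The order records, the `region`/`status`/`units` fields, and the `aggregate` helper are hypothetical; a production pipeline would more likely push this into SQL or a dataframe library.

```python
from collections import defaultdict


def aggregate(rows, group_by, value, where=lambda r: True):
    """Filter rows, group them, and compute count, sum, and mean of `value`."""
    groups = defaultdict(list)
    for r in rows:
        if where(r):  # filtering criterion selects the relevant subset
            groups[r[group_by]].append(r[value])
    return {
        key: {"count": len(vals), "sum": sum(vals), "mean": sum(vals) / len(vals)}
        for key, vals in groups.items()
    }


# Illustrative orders (not real data).
orders = [
    {"region": "EU", "status": "delivered", "units": 120},
    {"region": "EU", "status": "cancelled", "units": 30},
    {"region": "APAC", "status": "delivered", "units": 80},
    {"region": "EU", "status": "delivered", "units": 60},
]
summary = aggregate(orders, "region", "units", where=lambda r: r["status"] == "delivered")
```

Keeping the filter as a parameter lets one definition serve several reports (for example, delivered versus cancelled orders) without duplicating the calculation.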

Reporting Platform Integration

Integrating the automated data aggregation process with a reporting platform is essential for efficient delivery of insights. This integration often involves creating custom dashboards or reports that automatically update with the latest aggregated data. The reporting platform needs to support the specific data formats and visualizations required by the users. This ensures that the aggregated data is easily accessible and presented in a format that is readily understandable and actionable.

Furthermore, automated data delivery mechanisms, such as email notifications or scheduled reports, are crucial for providing timely and consistent updates. This helps users stay informed about key performance indicators and trends without manual intervention. A well-integrated reporting platform facilitates the dissemination of actionable insights and supports strategic decision-making.
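As an illustration of scheduled delivery, the sketch below computes the next daily run time and composes a plain-text KPI digest with Python's standard `email.message.EmailMessage`. The 07:00 schedule, the addresses, and the KPI names are assumptions. Actually sending the message (for example with `smtplib.SMTP(...).send_message(msg)`) and triggering the job (cron or a workflow scheduler) are left out because they depend on the environment.

```python
from datetime import datetime, timedelta
from email.message import EmailMessage


def next_run(now: datetime, hour: int = 7) -> datetime:
    """Next daily run at hour:00 (naive local time): today if still ahead, else tomorrow."""
    candidate = now.replace(hour=hour, minute=0, second=0, microsecond=0)
    return candidate if candidate > now else candidate + timedelta(days=1)


def build_report_email(kpis: dict, recipients: list[str],
                       sender: str = "reports@example.com") -> EmailMessage:
    """Compose a plain-text KPI digest; delivery is left to the caller."""
    msg = EmailMessage()
    msg["Subject"] = "Daily supply chain KPI report"
    msg["From"] = sender
    msg["To"] = ", ".join(recipients)
    msg.set_content("\n".join(f"{name}: {value}" for name, value in sorted(kpis.items())))
    return msg


run_at = next_run(datetime(2024, 5, 1, 9, 30))  # already past 07:00, so next day
msg = build_report_email({"on_time_rate": "94%", "open_orders": 312}, ["ops@example.com"])
```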

Security and Governance

Ensuring the security and governance of the automated data aggregation process is paramount. Robust access controls and data encryption protocols are critical to protect sensitive information from unauthorized access or misuse. Data governance policies should be established to define roles, responsibilities, and procedures for managing data quality, accuracy, and integrity. This includes defining clear guidelines for data validation, access permissions, and compliance with relevant regulations (like GDPR or HIPAA).
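Access controls can start as a deny-by-default permission check. The sketch below assumes three hypothetical roles and a made-up permission matrix. It also redacts a sensitive pricing field for roles that may view reports but not supplier pricing. Encryption and integration with a real identity provider are outside its scope.

```python
from enum import Enum


class Role(Enum):
    VIEWER = "viewer"
    ANALYST = "analyst"
    ADMIN = "admin"


# Hypothetical permission matrix: action -> roles allowed to perform it.
PERMISSIONS = {
    "read_report": {Role.VIEWER, Role.ANALYST, Role.ADMIN},
    "read_supplier_pricing": {Role.ANALYST, Role.ADMIN},
    "edit_pipeline": {Role.ADMIN},
}


def authorize(role: Role, action: str) -> bool:
    """Deny by default: actions missing from the matrix are never allowed."""
    return role in PERMISSIONS.get(action, set())


def redact(row: dict, role: Role, sensitive=("unit_cost",)) -> dict:
    """Mask sensitive fields unless the role may read supplier pricing."""
    if authorize(role, "read_supplier_pricing"):
        return row
    return {k: ("***" if k in sensitive else v) for k, v in row.items()}
```

Deny-by-default means a newly added action stays inaccessible until someone grants it explicitly, which keeps the permission matrix the single place to review.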

Auditing and monitoring the automated processes are critical for detecting potential issues or anomalies. This includes tracking data transformations, identifying errors, and verifying the integrity of the aggregated data. Appropriate logging mechanisms and regular audits help maintain a high level of transparency and accountability throughout the entire process.
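One way to make transformations auditable, sketched under the assumption of a JSON-lines audit log, is to wrap each step so it records row counts and a SHA-256 fingerprint of its input and output. The `audited` wrapper and the logger name `pipeline.audit` are illustrative; the records could equally be stored in files or a log service.

```python
import hashlib
import json
import logging
from datetime import datetime, timezone

logging.basicConfig(level=logging.INFO, format="%(message)s")
audit_log = logging.getLogger("pipeline.audit")  # hypothetical logger name


def fingerprint(rows) -> str:
    """Deterministic SHA-256 digest of the rows (keys sorted before hashing)."""
    payload = json.dumps(rows, sort_keys=True, default=str).encode()
    return hashlib.sha256(payload).hexdigest()


def audited(step_name, func, rows):
    """Run one transformation step and emit a structured audit record."""
    before = fingerprint(rows)
    result = func(rows)
    record = {
        "step": step_name,
        "at": datetime.now(timezone.utc).isoformat(),
        "rows_in": len(rows),
        "rows_out": len(result),
        "sha256_in": before,
        "sha256_out": fingerprint(result),
    }
    audit_log.info(json.dumps(record))
    return result, record


rows = [{"sku": "A-100", "units": 5}, {"sku": "B-200", "units": 0}]
result, record = audited("drop_zero_units", lambda rs: [r for r in rs if r["units"] > 0], rows)
```

Because the fingerprints are deterministic, an auditor can re-run a step on archived input and confirm that it reproduces the logged output hash.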

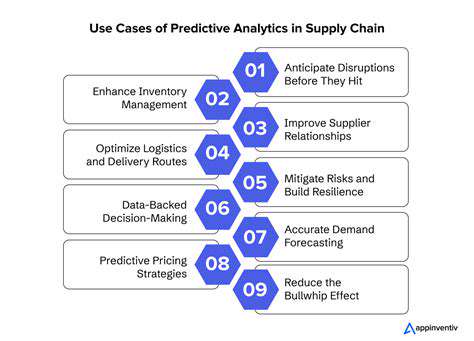

Predictive Analytics for Proactive Supply Chain Management

Predictive Modeling Techniques

Predictive analytics relies on a variety of modeling techniques to forecast future outcomes. These range from simple linear regression to more complex machine learning models, each with its own strengths and weaknesses. Which technique is most suitable depends heavily on the nature of the data and the desired outcome, so choosing the right one is crucial for accurate predictions and actionable insights.
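To make the simple end of that range concrete, the sketch below fits one-feature linear regression by ordinary least squares, using the closed-form slope and intercept given in the docstring. The weekly demand figures are illustrative, chosen to be perfectly linear so the result is easy to check.

```python
def fit_linear(xs, ys):
    """Fit y = a + b*x by ordinary least squares (single feature).

    b = sum((x - mean_x) * (y - mean_y)) / sum((x - mean_x) ** 2)
    a = mean_y - b * mean_x
    """
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


# Illustrative, perfectly linear weekly demand (not real data).
weeks = [1, 2, 3, 4, 5]
demand = [100, 110, 120, 130, 140]
a, b = fit_linear(weeks, demand)
forecast_week_6 = a + b * 6  # 90 + 10 * 6 = 150
```

Its strengths are a closed-form fit and two interpretable parameters. Its main weakness is that it cannot capture nonlinear patterns or interactions without hand-built features, which is where more complex models come in.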

Data Preparation and Feature Engineering

A critical aspect of successful predictive analytics is the meticulous preparation of the data. This involves cleaning, transforming, and selecting relevant features from the dataset. Data cleaning often involves handling missing values, outliers, and inconsistencies to ensure data quality. Feature engineering involves creating new features from existing ones to better capture the relationships within the data, which can significantly improve model performance.
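A minimal sketch of both steps on a demand series, assuming outliers are handled by clipping to Tukey's fences (one common choice among several) and features are lagged values plus a trailing rolling mean. The series and the lag and window sizes are illustrative assumptions.

```python
from statistics import quantiles


def clip_outliers(values, k=1.5):
    """Clip values to Tukey's fences [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(values, n=4)  # default 'exclusive' method
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [min(max(v, low), high) for v in values]


def make_features(series, lags=(1, 2), window=3):
    """Build lag and trailing rolling-mean features for next-step prediction.

    Only past values feed each row's features, so the target is never leaked,
    and rows start once enough history exists (no missing values introduced).
    """
    start = max(max(lags), window)
    rows = []
    for t in range(start, len(series)):
        row = {f"lag_{lag}": series[t - lag] for lag in lags}
        row[f"rolling_mean_{window}"] = sum(series[t - window:t]) / window
        row["target"] = series[t]
        rows.append(row)
    return rows


raw_demand = [10, 12, 11, 15, 14, 18, 500]  # illustrative; 500 looks like an entry error
cleaned = clip_outliers(raw_demand)          # 500 is clipped to the upper fence
features = make_features(cleaned)
```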

Model Training and Evaluation

Once the data is prepared, it is typically split into training and testing sets. The model is trained on the training set, which involves adjusting its parameters to optimize performance, and is then evaluated on the held-out testing set. This evaluation is crucial: performance on data the model did not see during training is the best available estimate of how well it will generalize to new, unseen data and predict future outcomes.
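For time-ordered supply chain data, the split is usually chronological, because a random split would let the model train on weeks that come after the ones it is tested on. The sketch below holds out the most recent quarter of an illustrative demand history, fits a linear trend, and compares its mean absolute error (MAE) with a naive "repeat the last value" baseline. The data, the 25% test fraction, and the choice of baseline are assumptions.

```python
def chronological_split(rows, test_fraction=0.25):
    """Hold out the most recent rows so the test set lies in the model's future."""
    cut = int(len(rows) * (1 - test_fraction))
    return rows[:cut], rows[cut:]


def fit_linear(xs, ys):
    """One-feature ordinary least squares: returns intercept a and slope b."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b


def mean_absolute_error(actual, predicted):
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


# Illustrative weekly demand, not real data: (week, units).
history = [(1, 100), (2, 112), (3, 118), (4, 131), (5, 140), (6, 149), (7, 162), (8, 170)]
train, test = chronological_split(history)

a, b = fit_linear([w for w, _ in train], [d for _, d in train])
actual = [d for _, d in test]
model_pred = [a + b * w for w, _ in test]
naive_pred = [train[-1][1]] * len(test)  # baseline: repeat the last observed value

model_mae = mean_absolute_error(actual, model_pred)
naive_mae = mean_absolute_error(actual, naive_pred)
```

Comparing against a trivial baseline guards against a model that looks accurate in absolute terms but adds nothing over the simplest possible forecast.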

Deployment and Monitoring

After a model is trained and evaluated, it needs to be deployed into a production environment to generate predictions in real time. This typically involves integrating the model with existing systems and processes. Continuous monitoring of the model's performance is essential to ensure its accuracy and relevance over time. As new data becomes available, models may need to be retrained or updated to maintain their effectiveness.
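Continuous monitoring can be as simple as comparing recent prediction error with the error measured at deployment. The sketch below keeps a sliding window of absolute errors and flags retraining when the window's MAE exceeds a multiple of the baseline. The window size, the 1.5x tolerance, and the `ErrorMonitor` class itself are hypothetical choices, not a standard.

```python
from collections import deque


class ErrorMonitor:
    """Track recent absolute errors and flag drift above a baseline MAE."""

    def __init__(self, baseline_mae: float, window: int = 4, tolerance: float = 1.5):
        self.baseline_mae = baseline_mae  # e.g. the test-set MAE at deployment
        self.tolerance = tolerance        # hypothetical threshold multiplier
        self.errors = deque(maxlen=window)

    def record(self, actual: float, predicted: float) -> None:
        """Log one realized outcome against the prediction made for it."""
        self.errors.append(abs(actual - predicted))

    def recent_mae(self) -> float:
        return sum(self.errors) / len(self.errors) if self.errors else 0.0

    def needs_retraining(self) -> bool:
        """True once the window is full and recent MAE exceeds the threshold."""
        window_full = len(self.errors) == self.errors.maxlen
        return window_full and self.recent_mae() > self.tolerance * self.baseline_mae


monitor = ErrorMonitor(baseline_mae=2.0, window=3)
for actual, predicted in [(100, 99), (105, 103), (110, 108)]:
    monitor.record(actual, predicted)
before_shift = monitor.needs_retraining()  # recent MAE 5/3, below 3.0
monitor.record(120, 110)                   # demand jumps; error of 10
after_shift = monitor.needs_retraining()   # recent MAE 14/3, above 3.0
```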

Business Applications of Predictive Analytics

Predictive analytics offers a wide range of practical applications across various industries. In finance, it can be used to forecast market trends, assess credit risk, and manage investment portfolios. In healthcare, it can predict patient outcomes, identify high-risk individuals, and personalize treatment plans. These are just a few examples of the diverse applications of predictive analytics.

Ethical Considerations and Bias Mitigation

Implementing predictive analytics requires careful consideration of the potential ethical implications. Models trained on biased data can perpetuate and even amplify existing societal biases, leading to unfair or discriminatory outcomes. Therefore, it is crucial to address potential biases in the data and model training process to ensure fairness and accountability. Developing transparent and explainable models is also essential for building trust and understanding.
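A first, partial check for bias is to compare model error across groups. The sketch assumes hypothetical supplier regions as the grouping. In a human-facing application the groups would be the population segments of concern, and a large gap would prompt a closer look at data coverage and features. Error parity is only one of several fairness criteria, and passing this check does not by itself show that a model is fair.

```python
from collections import defaultdict


def error_by_group(records):
    """Mean absolute error per group from (group, actual, predicted) triples."""
    totals = defaultdict(lambda: [0.0, 0])  # group -> [sum of |error|, count]
    for group, actual, predicted in records:
        totals[group][0] += abs(actual - predicted)
        totals[group][1] += 1
    return {group: err_sum / n for group, (err_sum, n) in totals.items()}


def disparity_gap(group_errors):
    """Largest minus smallest group MAE; 0.0 means errors are evenly spread."""
    values = list(group_errors.values())
    return max(values) - min(values)


# Illustrative forecasts grouped by hypothetical supplier region.
records = [
    ("north", 100, 95), ("north", 80, 82),
    ("south", 100, 80), ("south", 60, 70),
]
group_errors = error_by_group(records)
gap = disparity_gap(group_errors)
```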